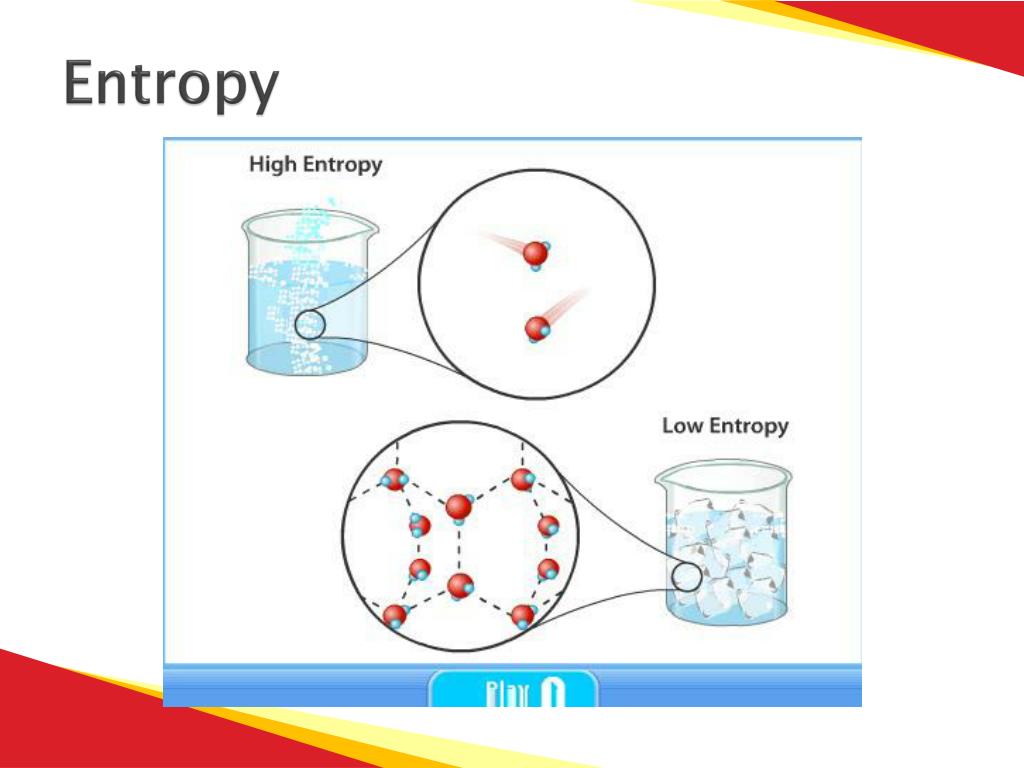

Entropy is a thermodynamic property that quantifies the degree of disorder or randomness within a system. The unit of entropy depends on the system of units being used. In the International System of Units (SI), the unit of entropy is the joule per kelvin (J/K), which combines the unit of energy, the joule (J), with the unit of temperature, the kelvin (K). The joule is the amount of energy transferred or converted when a force of one newton acts over a distance of one meter. The kelvin is based on the Kelvin scale, which uses the same increment size as the Celsius scale but starts at absolute zero.

The plot of entropy vs. bits should increase from zero, gradually approach 1, and then saturate, remaining constant for all the remaining bits. I am unable to get this curve (Figure 1). I would appreciate help in correcting where I am going wrong.

First, you just define the function:

```matlab
function ... = compute_entropy(bits, blocks, threshold, replicate)
bit_entropy = zeros(length(bits), 1);
% ...
tent_map = zeros(b, 1);   % Preallocate for memory management
for j = 2:b               % j is the iterator, b is the current bit
    % ...
end
% ...
p1 = sum(s == 1) / length(s);                                % probability of 1s
p0 = 1 - p1;                                                 % probability of 0s
entropy(t) = -p1 * log2(p1) - (1 - p1) * log2(1 - p1);       % entropy in bits
% ...
bit_entropy(b) = -p1 * log2(p1) - (1 - p1) * log2(1 - p1);   % entropy in bits
% ...
end
```

And then you just have to run the script:

```matlab
% Entropy script
% TO DO: compute the mean for each bit index in trial_entropy
replicate = false; % Use true for debugging only.
% ...
summary = struct('mean', mean(entropy), 'sd', std(entropy));
```
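For reference, here is a minimal Python sketch (not MATLAB, and not the original code) of the per-series entropy that the fragments compute: p1 is the fraction of 1s in the thresholded series s, and the entropy in bits is -p1·log2(p1) - (1 - p1)·log2(1 - p1). The function name `binary_entropy` is hypothetical. It follows the usual convention 0·log2(0) = 0, so a series of all 0s or all 1s gives entropy 0 instead of NaN.

```python
import math
from typing import Sequence


def binary_entropy(s: Sequence[int]) -> float:
    """Plug-in Shannon entropy, in bits, of a non-empty 0/1 sequence s."""
    if len(s) == 0:
        raise ValueError("s must be non-empty")
    # Fraction of 1s, the counterpart of MATLAB's sum(s == 1) / length(s).
    p1 = sum(1 for v in s if v == 1) / len(s)
    p0 = 1.0 - p1
    h = 0.0
    for p in (p1, p0):
        # Convention: 0 * log2(0) = 0. Evaluating that term directly gives NaN
        # in MATLAB (0 * -Inf) and raises ValueError with math.log2 in Python.
        if p > 0.0:
            h -= p * math.log2(p)
    return h


print(binary_entropy([0, 1, 0, 1]))  # 1.0
print(binary_entropy([1, 1, 1, 1]))  # 0.0
```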

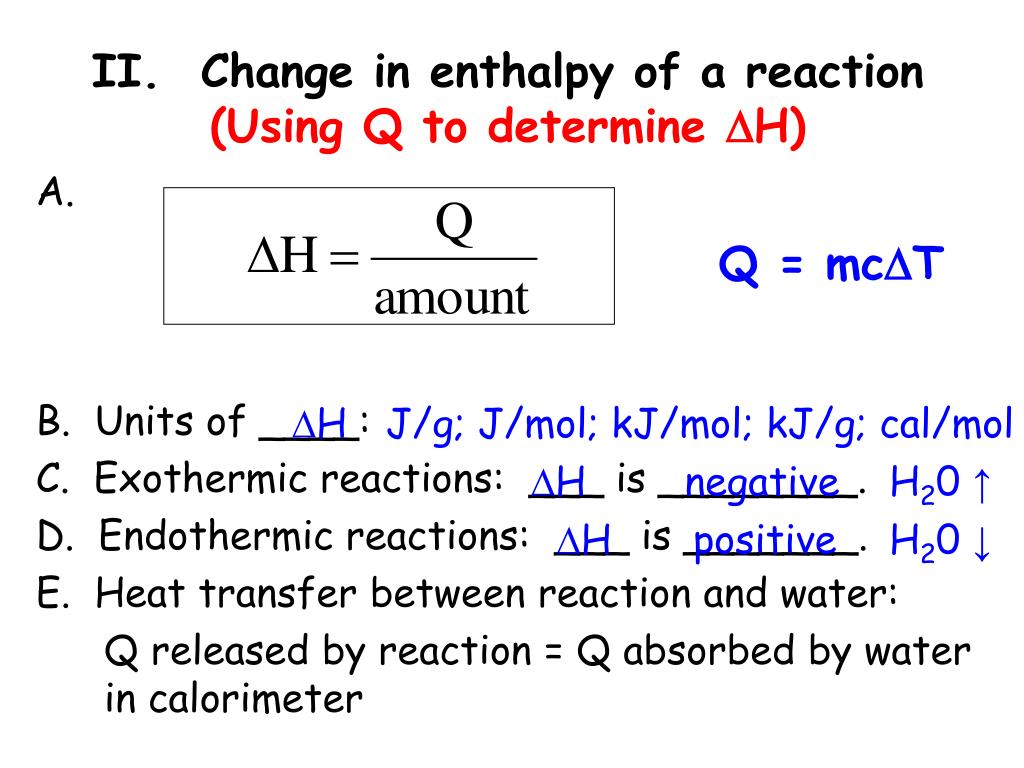

# Entropy unit code #

For each code length, the entropy is calculated. The calculation is repeated N times for each code length, each time starting from a different initial condition of the dynamical system. This approach is adopted to check at which code length the entropy becomes 1.
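The procedure described here (for each code length, compute the entropy of N series started from different initial conditions, then look at the mean) can be sketched in Python as follows. Assumptions, none of which come from the original code: the tent map is x -> 2·min(x, 1 - x) (slope 2), a symbol is 1 when x > 0.5, initial conditions are drawn uniformly from [0, 1), and a mean within a tolerance `tol` of 1 counts as "becoming 1". All function names are hypothetical; the per-length (mean, sd) pair plays the role of the `summary` struct in the MATLAB script.

```python
import math
import random
import statistics
from typing import Dict, List, Optional, Sequence, Tuple


def tent_symbols(x0: float, length: int, threshold: float = 0.5) -> List[int]:
    """Iterate the slope-2 tent map from x0 and threshold each state to 0/1."""
    x = x0
    symbols = []
    for _ in range(length):
        symbols.append(1 if x > threshold else 0)
        x = 2.0 * x if x < 0.5 else 2.0 * (1.0 - x)  # x -> 2 * min(x, 1 - x)
    return symbols


def entropy_bits(s: Sequence[int]) -> float:
    """Plug-in entropy (bits) of a 0/1 sequence, with 0 * log2(0) taken as 0."""
    p1 = sum(s) / len(s)  # count of 1s / length (s holds only 0s and 1s)
    h = 0.0
    for p in (p1, 1.0 - p1):
        if p > 0.0:
            h -= p * math.log2(p)
    return h


def entropy_vs_length(lengths: Sequence[int], n_trials: int, threshold: float = 0.5,
                      seed: Optional[int] = None) -> Dict[int, Tuple[float, float]]:
    """Mean and sample standard deviation of the entropy for each code length.

    Each of the n_trials series starts from a different random initial condition.
    """
    if n_trials < 2:
        raise ValueError("need at least 2 trials for a standard deviation")
    rng = random.Random(seed)
    summary = {}
    for b in lengths:
        values = [entropy_bits(tent_symbols(rng.random(), b, threshold))
                  for _ in range(n_trials)]
        summary[b] = (statistics.mean(values), statistics.stdev(values))
    return summary


def first_length_reaching(summary: Dict[int, Tuple[float, float]], target: float = 1.0,
                          tol: float = 0.03) -> Optional[int]:
    """Smallest code length whose mean entropy is within tol of target, or None."""
    for b in sorted(summary):
        if abs(summary[b][0] - target) <= tol:
            return b
    return None


if __name__ == "__main__":
    summary = entropy_vs_length([2, 8, 16, 32], n_trials=1000, seed=1)
    for b, (mean, sd) in summary.items():
        print(f"{b:2d} bits: mean entropy {mean:.3f}, sd {sd:.3f}")
    print("first code length within tolerance of 1:", first_length_reaching(summary))
```

One caveat about long series: with slope exactly 2, every tent-map step is exact in double precision, so a starting value from `random.random()` (a multiple of 2**-53) collapses to exactly 0 within about 54 iterations. Code lengths should stay well below that, otherwise the trailing 0s pull the entropy down instead of letting it saturate.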

# Entropy unit series #

My earlier solution logic was not correct. In the revised code, I want to calculate the entropy for time series of length 2, 8, 16, and 32 bits.
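For code lengths such as 2, 8, 16 and 32, the expected curve can also be computed exactly, assuming the thresholded symbols behave like independent fair coin flips (which holds for the slope-2 tent map with threshold 0.5 and a uniformly distributed initial condition). The number of 1s, k, in a length-n series is then Binomial(n, 1/2), and the expected entropy is the binomial-weighted average of the binary entropy h2(k/n). This Python sketch (hypothetical function names) gives a reference curve that simulated means can be compared against.

```python
import math


def h2(p: float) -> float:
    """Binary entropy in bits, with 0 * log2(0) taken as 0."""
    h = 0.0
    for q in (p, 1.0 - p):
        if q > 0.0:
            h -= q * math.log2(q)
    return h


def expected_entropy(n: int) -> float:
    """Expected plug-in entropy (bits) of n independent fair 0/1 symbols.

    The number of 1s, k, is Binomial(n, 1/2), so
    E[H] = sum over k of C(n, k) / 2**n * h2(k / n).
    """
    return sum(math.comb(n, k) * h2(k / n) for k in range(n + 1)) / 2 ** n


if __name__ == "__main__":
    for n in (2, 8, 16, 32):
        print(f"{n:2d} bits: expected entropy {expected_entropy(n):.4f}")
```

The values rise from 0 (n = 1) and 0.5 (n = 2) towards 1 but never reach it exactly; to leading order the gap is about 1/(2n·ln 2) bits (the Miller-Madow bias), so "the entropy becomes 1" has to be tested with a tolerance.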

# Entropy unit update #

I want to calculate the entropy of a random variable that is the discretized (0/1) version of a continuous random variable x. The random variable denotes the state of a nonlinear dynamical system called the Tent Map. Iterating the Tent Map yields a time series of length N. The code should exit as soon as the entropy of the discretized time series becomes equal to the entropy of the dynamical system. It is known theoretically that the entropy of the system is H = log_e(2) = ln(2) ≈ 0.69. The objective of the code is to find the number of iterations, j, needed to produce the same entropy as the entropy of the system, H.

Problem 1: When I calculate the entropy of the binary time series, which is the information message, should I be doing it in the same base as H? Or should I convert the value of H to bits, because the information message is in 0/1? The two choices give different results, i.e., different values of j.

Problem 2: The probability of 0's or 1's can become zero, so the log term becomes infinite and the corresponding entropy becomes NaN. To prevent this, I thought of putting a check using if-else, but the check `if entropy(:,j)=NaN` does not seem to be working.

I would be grateful for ideas and help to solve this problem.

UPDATE: I implemented the suggestions and answers to correct the code.
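Both problems can be illustrated with a short Python sketch (hypothetical names, not the original MATLAB). For Problem 1: ln 2 nats and 1 bit are the same quantity (converting nats to bits divides by ln 2), so comparing a log2-based entropy with 0.69 mixes units; keeping both sides in one base, either log2-based entropy against 1 or natural-log entropy against ln 2, makes the comparison consistent. For Problem 2: 0·log2(0) evaluates to 0·(-Inf) = NaN, and NaN never compares equal to anything, itself included, so even written with `==` the test `entropy(:,j) == NaN` is always false; MATLAB's `isnan` (here `math.isnan`) is the check that works, and dropping zero-probability terms (0·log 0 = 0) avoids the NaN entirely. The sketch assumes "equal to H" means "within a tolerance `tol`", and caps the iterations at 50 because, with slope exactly 2, double-precision iterates collapse to 0 after roughly 54 steps.

```python
import math
import random
from typing import Optional

# Entropy of the slope-2 tent map, H = ln 2, expressed in both units.
H_NATS = math.log(2)
H_BITS = H_NATS / math.log(2)  # nats -> bits: divide by ln 2, giving exactly 1.0


def entropy_bits(n_ones: int, n: int) -> float:
    """Plug-in entropy (bits) of a 0/1 series containing n_ones ones out of n symbols."""
    h = 0.0
    for p in (n_ones / n, (n - n_ones) / n):
        if p > 0.0:  # convention 0 * log2(0) = 0, so no NaN can appear
            h -= p * math.log2(p)
    return h


def iterations_to_reach(x0: float, target_bits: float = H_BITS, tol: float = 1e-3,
                        max_iter: int = 50, threshold: float = 0.5) -> Optional[int]:
    """First j at which the entropy of the first j symbols is within tol of target_bits.

    Returns None if that never happens within max_iter iterations.
    """
    x = x0
    n_ones = 0
    for j in range(1, max_iter + 1):
        if x > threshold:
            n_ones += 1
        if abs(entropy_bits(n_ones, j) - target_bits) <= tol:
            return j
        x = 2.0 * x if x < 0.5 else 2.0 * (1.0 - x)  # one tent-map step
    return None


if __name__ == "__main__":
    # Problem 2: in MATLAB log2(0) is -Inf, so 0 * log2(0) is 0 * (-Inf) = NaN.
    naive = 0.0 * -math.inf
    print(naive == naive)     # False: NaN is not equal to anything, even itself
    print(math.isnan(naive))  # True: this is the test that works

    # Problem 1: compare the log2-based entropy with H in bits (1.0), not 0.69.
    rng = random.Random(0)
    print(iterations_to_reach(rng.random()))
```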

This question is a continuation of a previous one I asked: Matlab: Plot of entropy vs digitized code length.
